HS-5010: Reliability Analysis of an Optimum

Tutorial Level: Intermediate In this tutorial, you will perform a reliability analysis to determine how sensitive the objective is to small parameter variations around the optimum.

The objective has been minimized to superimpose the computed values to the reference.

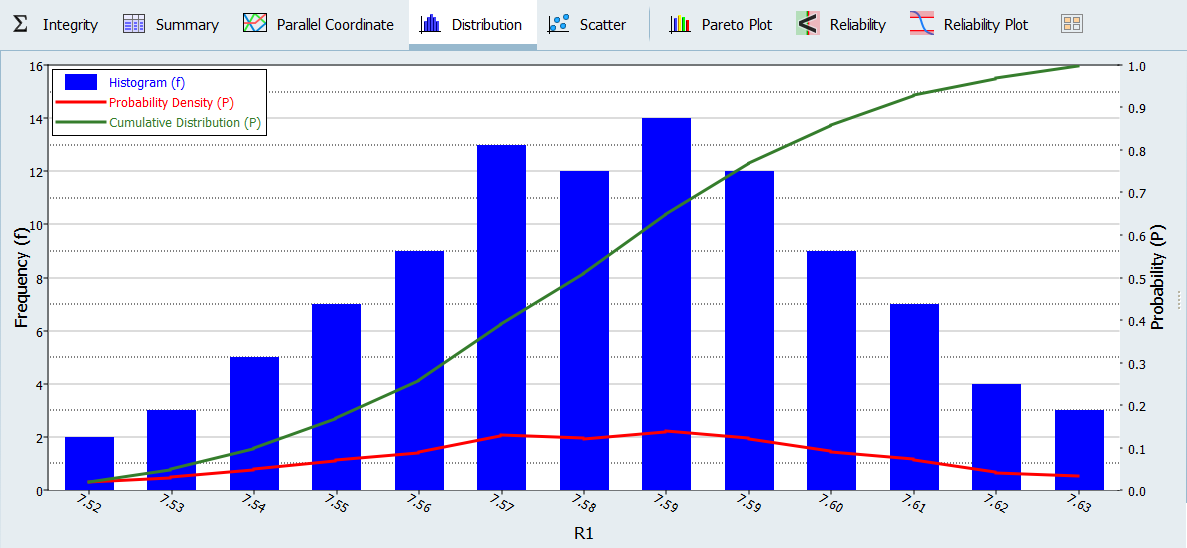

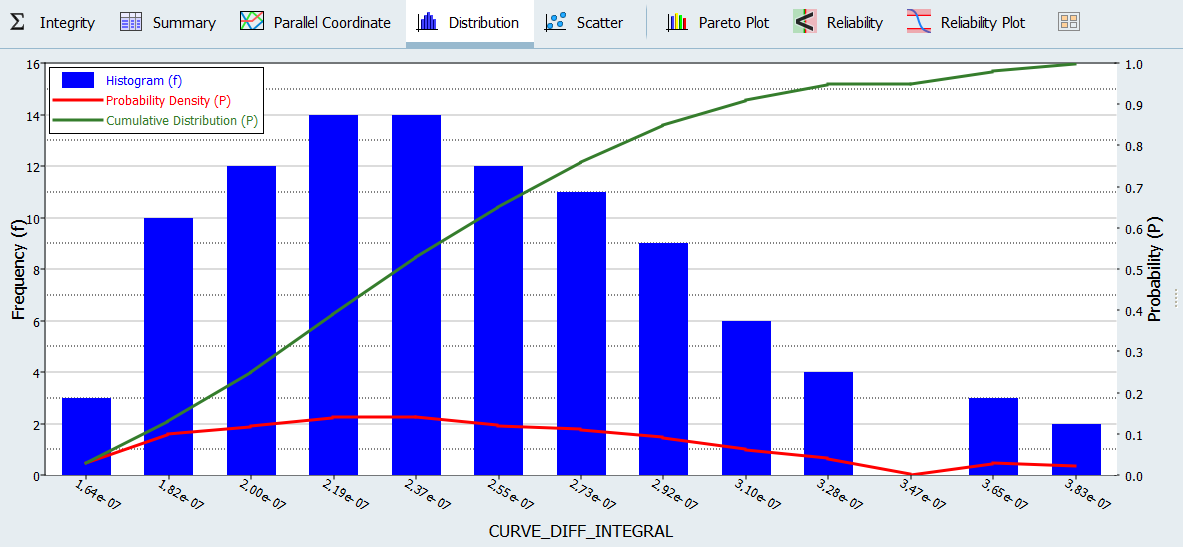

In a Stochastic study, the parameters are considered to be random (uncertain) variables. This means parameters could take random values following a specific distribution (as seen in the normal distribution in Figure 1) around the optimum value (µ). The variations are sampled in the space and the designs are evaluated to gain insight into the response distribution.

Run Stochastic

In this step, you will check the reliability of the optimal solution found with GRSM. You will use Normal Distribution for the parameter variations and MELS DOE for the space sampling.

-

Add a Stochastic.

- Go to the Define Input Variables step.

-

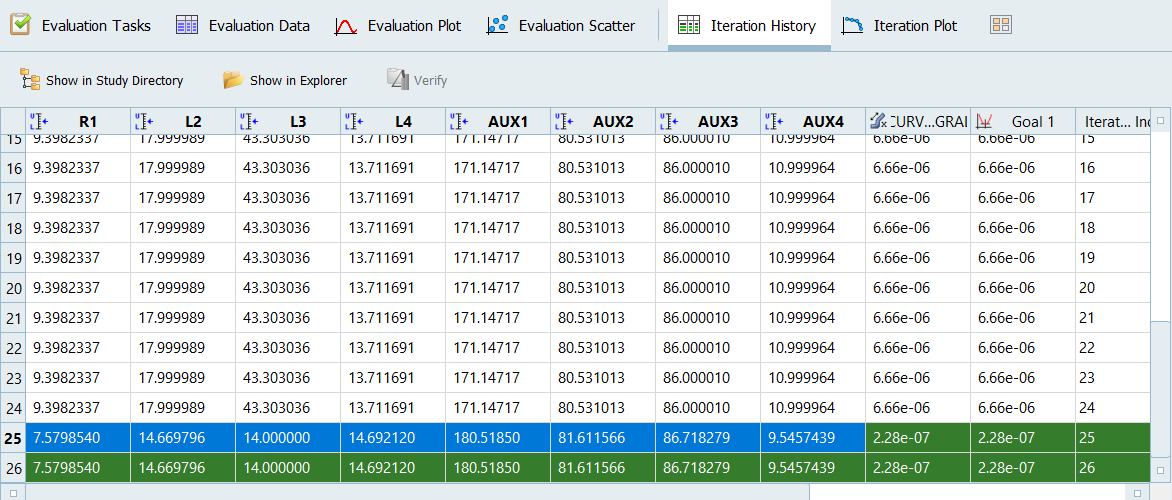

In the Nominal column, copy the parameter values at the optimal design.

-

Go to the Distributions tab.

- In the Distribution column, verify the distribution type is set to Normal Variance.

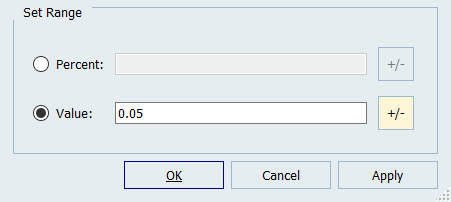

For Stochastic studies, you must provide data about the standard variation (or variance ) of parameters to account for uncertainties. This data should be defined in the 2 column of the Distributions tab. By default, is computed in HyperStudy using the range rule which is a function of the DVs bounds. When you do not have reliable data about the standard deviation, you can modify the default by modifying the upper and lower bounds of the parameters, as is done in step 5.

-

Highlight all variables and then click Edit Selected

Bounds tab.

The values in the 2 column (variance) of the Distributions tab are updated.

-

Go to the Specifications step.

- In the work area, set the Mode to Mels.

- Click Apply.

-

Go to the Evaluate step.

- Click Evaluate Tasks.

Post-Process Stochastic Results

In this step, you will review the evaluation results within the Post-Processing step.

-

Go to the tab.

- Optional:

Review evaluation fluctuations.

- Go to tab.

- Using the Channel selector, select the CURVE_DIFF_INTEGRAL label.

-

Review the historgrams of the Stochastic

results

-

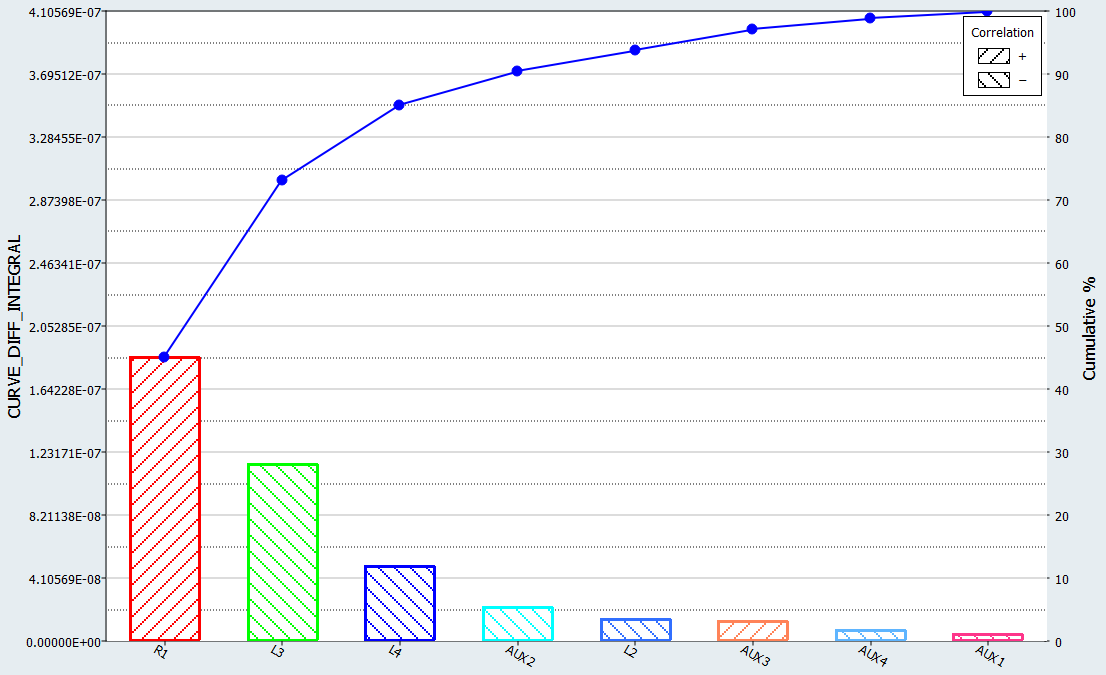

Click the Pareto Plot tab.

- From the Channel selector, select Options.

- Enable the Effect curve checkbox.

The dashed lines indicate the effect. For example, R1 has a positive effect on the response meaning the response increases higher than the optimum for R1 values. A high response value means worse matching between the computed and reference curves.Figure 7.

-

Estimate the probability of failure for the output responses (probability for

an output response to violate a user selected bound).

- Click the Reliability tab.

- Click Add Reliability.

The bound value is chosen with respect to the most probable value the response would take. It is higher than the optimum, but remains satisfactory as it still ensures good curves matching.