Platform and Hardware Requirements

Platforms, operating systems, and processors supported by nanoFluidX, and required and recommended hardware.

Supported Platforms

Linux

nanoFluidX is available for most common Unix-based operating systems that provide GCC and GLIBC system libraries at version 4.8.5 and 2.17 or newer, respectively. Data on the GCC and GLIBC versions shipped with each distribution can be found on DistroWatch. nanoFluidX for Linux is distributed with OpenMPI 4.1.6 and NVIDIA CUDA 11.6.2 libraries. A compatible NVIDIA graphics driver (version 450.80.02 or newer) must be installed on the system.

| Distribution | GCC version | GLIBC version |
|---|---|---|
| RHEL 8.x | 8.4.1 | 2.28 |
| CentOS 8.x | 8.4.1 | 2.28 |
| CentOS 7.x | 4.8.5 | 2.17 |
| SLES 15 SP3 | 4.8.5 | 2.22 |
| Ubuntu 18.04 | 7.3.0 | 2.27 |
| Ubuntu 16.04 | 5.3.1 | 2.23 |
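You can check the GLIBC floor above on a target machine before installation. The following is a minimal sketch that assumes Python 3.9 or newer. It reads the GLIBC version with the standard-library `platform.libc_ver()` and compares it numerically with 2.17. It does not check GCC. The helper names `parse_version` and `glibc_ok` are illustrative and are not part of the nanoFluidX distribution.

```python
"""Sketch: compare the local GLIBC version with the nanoFluidX minimum (2.17)."""
import platform

MIN_GLIBC = (2, 17)  # minimum GLIBC version stated above


def parse_version(text: str) -> tuple[int, ...]:
    """Turn a dotted version string such as '2.28' into (2, 28)."""
    return tuple(int(part) for part in text.strip().split("."))


def glibc_ok(version: str, minimum: tuple[int, ...] = MIN_GLIBC) -> bool:
    """True if `version` is equal to or newer than `minimum`."""
    return parse_version(version) >= minimum


if __name__ == "__main__":
    lib, version = platform.libc_ver()
    if lib != "glibc" or not version:
        print("GLIBC not detected (not a glibc-based Linux system?)")
    elif glibc_ok(version):
        print(f"GLIBC {version} meets the minimum")
    else:
        print(f"GLIBC {version} is older than 2.17")
```

Comparing tuples of integers rather than strings avoids false results such as "2.9" sorting after "2.17".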

Windows

nanoFluidX is available for Windows 10. nanoFluidX is distributed with NVIDIA CUDA 11.6.2 libraries. A compatible NVIDIA graphics driver (version 452.39 or newer) must be installed on the system. In WDDM driver mode, the GPU is a shared resource, and heavy GPU usage for display output (pre- and post-processing) can impair performance. For more information, refer to Windows Driver Mode on this page.
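Each platform states a minimum driver version: 450.80.02 on Linux and 452.39 on Windows. The sketch below shows one way to compare the installed driver against these values. It assumes Python 3.9 or newer and that `nvidia-smi` is on the `PATH`, and it queries the driver with `--query-gpu=driver_version --format=csv,noheader`. It is an illustration only, not the check performed by the nanoFluidX system tests.

```python
"""Sketch: compare the installed NVIDIA driver with the minimum for this OS."""
import platform
import shutil
import subprocess
from typing import Optional

# Minimum driver versions stated on this page, keyed by platform.system().
MIN_DRIVER = {"Linux": "450.80.02", "Windows": "452.39"}


def version_tuple(text: str) -> tuple[int, ...]:
    """'450.80.02' -> (450, 80, 2), so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_ok(installed: str, minimum: str) -> bool:
    """True if the installed driver is equal to or newer than the minimum."""
    return version_tuple(installed) >= version_tuple(minimum)


def installed_driver() -> Optional[str]:
    """Driver version reported by nvidia-smi, or None if it cannot be read."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True,
    )
    lines = result.stdout.strip().splitlines()
    if result.returncode != 0 or not lines:
        return None
    return lines[0].strip()  # one line per GPU; all GPUs share one driver


if __name__ == "__main__":
    minimum = MIN_DRIVER.get(platform.system())
    found = installed_driver()
    if minimum is None or found is None:
        print("Unsupported OS or driver version not readable")
    elif driver_ok(found, minimum):
        print(f"Driver {found} meets the minimum {minimum}")
    else:
        print(f"Driver {found} is older than the minimum {minimum}")
```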

System Tests

It is recommended that you run the system tests included in the nanoFluidX distribution to catch setup issues early. These tests are intended for environments where nanoFluidX is run for the first time, or for users who are new to nanoFluidX and not yet familiar with its requirements. The system tests do not launch nanoFluidX itself. Instead, they help distinguish issues with the environment from issues with nanoFluidX case setups.

- On Linux:
  - Set the nanoFluidX environment using `source /path/to/the/set_NFX_environment.sh`.
  - Launch the tests, which are executed through a Python script, with `python3 ./system_tests.py`.
- On Windows:
  - Run the system tests via `system_tests.ps1` in PowerShell or `system_tests.bat` in Command Prompt.

The system tests perform the following checks:

- `nvidia-smi` is run to identify the installed driver version and compare it with the required minimum. A version equal to or newer than the minimum results in a success prompt.

- An executable tries to allocate and deallocate memory on each available GPU. A success prompt is shown for each GPU that is accessible and passes the test.

- On Linux only, an MPI ping-pong test is also performed between a number of ranks equal to the number of available GPUs. The choice of ranks is left to the system. Both host-host and device-device ping-pong tests are conducted by sending a 1 MB data package. A bandwidth equal to or greater than the suggested value results in a "meets recommended level" prompt.
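A ping-pong bandwidth figure comes from simple arithmetic. Each round trip moves the message there and back, so the data moved is twice the message size times the number of round trips. Because the transfers happen one after another, dividing that total by the elapsed time gives the one-way bandwidth. The minimal sketch below assumes that "1 MB" means 10^6 bytes. It takes the suggested bandwidth as a parameter because this page does not state its value. The iteration count and timing in the example are hypothetical, and this is not the code used by `system_tests.py`.

```python
"""Sketch: turn ping-pong timings into a bandwidth and a pass/fail flag."""

MESSAGE_BYTES = 1_000_000  # assumption: "1 MB" taken as 10**6 bytes


def pingpong_bandwidth(message_bytes: int, iterations: int, elapsed_s: float) -> float:
    """One-way bandwidth in bytes/s.

    Each iteration sends the message to the partner rank and back, so the
    total data moved is 2 * message_bytes * iterations.
    """
    if iterations <= 0 or elapsed_s <= 0:
        raise ValueError("iterations and elapsed time must be positive")
    return 2 * message_bytes * iterations / elapsed_s


def meets_recommended(bandwidth: float, recommended: float) -> bool:
    """Mirror the rule above: pass if bandwidth >= the suggested value."""
    return bandwidth >= recommended


if __name__ == "__main__":
    # Hypothetical measurement: 1000 round trips of a 1 MB message in 0.25 s.
    bw = pingpong_bandwidth(MESSAGE_BYTES, 1000, 0.25)
    print(f"{bw / 1e9:.1f} GB/s")
```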

The check_nFX script collects the following information into a ZIP file:

- System information provided through `systeminfo`
- Contents of the `PATH` environment variable
- Output of `nvidia-smi`
- Output of `nFX_SP --help`
- The following files from the case directory:
  - Used_casefile.cfg
  - restart.txt
  - overlapping.txt
  - LOG
  - PHASEINFO
  - PROBE

To run the script, set the nanoFluidX environment through the relevant script for your command line interface, then run:

- In Windows PowerShell: `check_nFX.ps1 -casedir /path/to/case/directory -outputdir /path/to/output/directory`
- In Command Prompt: `check_nFX.bat -casedir /path/to/case/directory -outputdir /path/to/output/directory`

The output is a ZIP file named nfx_check_TODAY.zip, where TODAY is replaced with the current date. Enclose paths that contain spaces in quotation marks.

Windows Driver Mode

- WDDM: On workstations and laptops, this is usually the default mode. This driver mode allows shared use of the NVIDIA GPU for display output and GPGPU computing.

- TCC: This driver mode uses the NVIDIA GPU exclusively for GPGPU computing. It is only available on NVIDIA Tesla, NVIDIA Quadro, or NVIDIA GTX Titan GPUs and is typically the default on recent NVIDIA Tesla GPUs. As the GPU is not available for display output in this mode, the machine must either be headless or have a second GPU (typically onboard) available for display purposes.

To change the driver mode, use `nvidia-smi -g {GPU_ID} -dm {0|1}`. The GPU ID can be identified by running `nvidia-smi` without any arguments. Pass 0 to the `-dm` option for WDDM mode and 1 for TCC mode. For example, to change to TCC mode in a single-GPU system, use the following command:

`nvidia-smi -g 0 -dm 1`

TCC driver mode is geared toward GPGPU computing, but the results depend on the hardware configuration. As of version 2021.2, our limited tests have not shown a distinct advantage in using TCC mode.

A simple case run on an NVIDIA Quadro P2000 (mobile GPU) in TCC mode took approximately three times the wall clock time of the same run in WDDM mode, even though GPU utilization in TCC mode was twice that in WDDM mode. This may indicate that TCC mode is not suitable for mobile devices and could lead to throttling. A test on an NVIDIA Quadro RTX 6000 (desktop GPU) showed no statistically significant difference between the WDDM and TCC driver modes.
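Before switching modes, it can help to list each GPU's current driver mode. The sketch below assumes the `driver_model.current` and `driver_model.pending` query fields of `nvidia-smi`, which report N/A on Linux. It parses output from `nvidia-smi --query-gpu=index,name,driver_model.current,driver_model.pending --format=csv,noheader`. It only builds the `nvidia-smi -g {GPU_ID} -dm {0|1}` command from this page and does not run it, because changing the driver mode typically requires administrator rights. The sample line is illustrative, not captured from a real system.

```python
"""Sketch: list each GPU's Windows driver mode and build the switch command."""


def parse_driver_models(csv_text: str) -> list[dict[str, str]]:
    """Parse csv,noheader output of index,name,driver_model.current,
    driver_model.pending, one GPU per line."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, rest = line.split(",", 1)
        # rsplit keeps any comma inside the GPU name intact
        name, current, pending = (f.strip() for f in rest.rsplit(",", 2))
        gpus.append({"index": index.strip(), "name": name,
                     "current": current, "pending": pending})
    return gpus


def switch_command(gpu_id: int, mode: str) -> list[str]:
    """Build `nvidia-smi -g {GPU_ID} -dm {0|1}`: 0 = WDDM, 1 = TCC."""
    codes = {"WDDM": "0", "TCC": "1"}
    if mode not in codes:
        raise ValueError(f"unknown driver mode: {mode!r}")
    return ["nvidia-smi", "-g", str(gpu_id), "-dm", codes[mode]]


if __name__ == "__main__":
    sample = "0, Quadro RTX 6000, WDDM, WDDM\n"  # illustrative output only
    for gpu in parse_driver_models(sample):
        if gpu["current"] != "TCC":
            print(" ".join(switch_command(int(gpu["index"]), "TCC")))
```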

Hardware Requirements

Minimum Requirements

- CUDA-enabled GPU

- The number of CPU cores should be at least equal to the number of GPU devices. Ideally, the number of CPU cores should slightly exceed the number of GPU devices to leave some capacity for system operations.

- System RAM should be at least equal to the combined memory of all GPUs.

- 3 TB HDD space for long-term storage, or 500 GB for an operational drive.

- Typical nanoFluidX output ranges from 20 to 400 GB, depending on the size of the case, the desired output, and the output frequency.

- A high-speed interconnect for multi-node systems, for example, InfiniBand.
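The CPU-core and RAM rules above can be applied mechanically to a machine description. The following is a minimal sketch. The `Machine` fields are hypothetical and must be filled in by hand or from operating-system tools. The pass/fail split (fewer cores than GPUs fails, exactly one core per GPU is only an advisory) is our reading of the "at least" and "ideally" wording. Storage and interconnect are not checked.

```python
"""Sketch: apply the Minimum Requirements rules of thumb to a machine."""
from dataclasses import dataclass


@dataclass
class Machine:
    cpu_cores: int
    ram_gb: float
    gpu_memory_gb: list[float]  # one entry per CUDA-enabled GPU


def check_minimums(m: Machine) -> tuple[list[str], list[str]]:
    """Return (failures, advisories); two empty lists mean every rule passes."""
    failures: list[str] = []
    advisories: list[str] = []
    n_gpus = len(m.gpu_memory_gb)
    if n_gpus == 0:
        failures.append("no CUDA-enabled GPU")
    # Rule: at least one CPU core per GPU, ideally a few more.
    if m.cpu_cores < n_gpus:
        failures.append(f"{m.cpu_cores} CPU cores for {n_gpus} GPUs")
    elif m.cpu_cores == n_gpus:
        advisories.append("no spare CPU cores for system operations")
    # Rule: system RAM at least equal to the combined GPU memory.
    total_gpu_gb = sum(m.gpu_memory_gb)
    if m.ram_gb < total_gpu_gb:
        failures.append(f"{m.ram_gb} GB RAM < {total_gpu_gb} GB combined GPU memory")
    return failures, advisories


if __name__ == "__main__":
    # Hypothetical node: 4 GPUs with 48 GB each, 4 cores, 128 GB RAM.
    node = Machine(cpu_cores=4, ram_gb=128, gpu_memory_gb=[48, 48, 48, 48])
    print(check_minimums(node))
```

For the hypothetical node, the four 48 GB GPUs add up to 192 GB, so 128 GB of system RAM falls short. Four cores for four GPUs meets the minimum but leaves no headroom.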

GPU Requirements and Information

- Linux
  - GPU must support Compute Capability 3.5 or higher.

- Windows
  - GPU must support Compute Capability 6.0 or higher.

- Load Balancing
  - A dynamic load balancing (DLB) scheme has been developed to make full use of all GPUs at any given time of the simulation. The DLB implementation was successfully tested with hundreds of GPUs on Tokyo Tech’s Tsubame 2.5 GPU supercomputer. Load balancing is turned on by default for all nanoFluidX simulations.

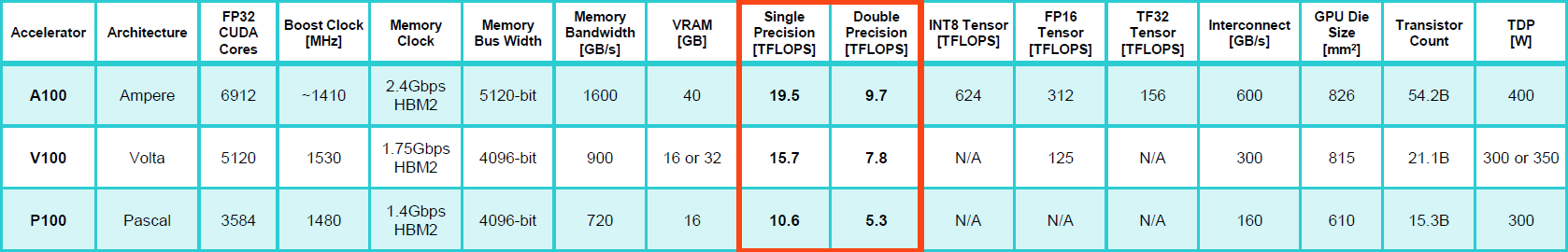

- GPU Recommendation
  - NVIDIA Enterprise GPUs, primarily the Tesla, Quadro, and RTX series, are recommended, as they are well-established GPU cards for HPC applications and nanoFluidX has been thoroughly tested on them.

Note: Despite good performance in single precision, the Quadro and RTX series effectively have no double precision capability. Ensure that single precision is sufficient for your needs. For more information, refer to Single versus Double Precision binaries.

Important: The NVIDIA GeForce line of GPU cards is CUDA-enabled. These cards can run nanoFluidX; however, Altair does not guarantee the accuracy, stability, or overall performance of nanoFluidX on them. Be aware that the current NVIDIA EULA prohibits using non-Tesla series cards as a computational resource in bundles of four GPUs or more.

Important: As NVIDIA has discontinued support for Kepler GPUs, support for them may be removed from future versions of nanoFluidX. This affects devices with compute capabilities 3.5 and 3.7, such as Tesla K80, Tesla K40, and Tesla K20. Refer to the NVIDIA website for more information. This information is also printed in the nanoFluidX log file. In the current version, support is unchanged: Kepler GPUs are still supported but deprecated.