Simulation Environment

What are the minimum hardware requirements?

- The number of CPU cores should at least equal the number of GPU devices. Ideally, the number of CPU cores will slightly exceed the number of available GPU devices, leaving some headroom for system operations.

- System RAM should be at least equal to the combined memory of all GPUs.

- 3 TB of HDD space (long-term storage) or 500 GB for the operational drive.

- Typical nanoFluidX output ranges from 20 to 400 GB, depending on the size of the case, the requested output, and the output frequency.

- A high-speed interconnect, such as InfiniBand, for multi-node systems.
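The CPU-core and RAM rules above are simple comparisons, so they are easy to check before a run. The following Python sketch checks a node description against them; the `NodeSpec` class, the `check_node` function, and the assumption that GPU memory is given per device in GB are all hypothetical and not part of nanoFluidX.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NodeSpec:
    """Hypothetical description of one compute node (not a nanoFluidX API)."""
    cpu_cores: int
    ram_gb: float
    gpu_memory_gb: List[float] = field(default_factory=list)  # one entry per GPU


def check_node(node: NodeSpec) -> List[str]:
    """Return warnings for the CPU-core and RAM rules of thumb."""
    warnings = []
    n_gpus = len(node.gpu_memory_gb)
    # Rule 1: at least one CPU core per GPU device.
    if node.cpu_cores < n_gpus:
        warnings.append(f"{node.cpu_cores} CPU cores < {n_gpus} GPUs")
    # Rule 1 (ideal case): a few spare cores for system operations.
    elif node.cpu_cores == n_gpus:
        warnings.append("no spare CPU cores for system operations")
    # Rule 2: system RAM at least equal to the combined GPU memory.
    total_gpu_mem = sum(node.gpu_memory_gb)
    if node.ram_gb < total_gpu_mem:
        warnings.append(f"{node.ram_gb} GB RAM < {total_gpu_mem} GB combined GPU memory")
    return warnings


if __name__ == "__main__":
    # Example: four 80 GB GPUs on a 32-core node with 512 GB of RAM.
    print(check_node(NodeSpec(cpu_cores=32, ram_gb=512, gpu_memory_gb=[80] * 4)))
    # → []
```

An empty list means both rules are satisfied; the storage and interconnect requirements are left out because they cannot be judged from these numbers alone.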

For more information, refer to Platform and Hardware Requirements.

Can nanoFluidX run on multiple nodes?

Yes. nanoFluidX uses Open MPI for parallelization and is multi-node ready, provided a high-speed interconnect such as InfiniBand is available.

Can nanoFluidX run on a CPU-only machine?

No, NVIDIA GPUs are necessary.

Does the performance of the CPU influence the speed of the simulation?

Only marginally. nanoFluidX performs all heavy computations on the GPU, and only a small part of the code runs on the CPU, so heavy investment in CPU power brings little or no acceleration.

Does hard-drive performance affect the simulation time?

Generally not; differences in storage speed have no significant effect on simulation time.

Can I use my desktop/laptop/workstation GPU for nanoFluidX?

Yes, provided the machine:

- Runs a supported operating system (Windows or Linux)

- Contains an NVIDIA GPU with CUDA compute capability higher than 3.0

- Has sufficient hardware resources
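The compute-capability condition can be checked from the "major.minor" string NVIDIA reports for a GPU (for example, via `nvidia-smi --query-gpu=compute_cap --format=csv` on recent drivers). The Python sketch below assumes that string as input; the function names are hypothetical. It compares the values numerically rather than as text, so that "10.0" is correctly treated as higher than "3.0".

```python
from typing import Tuple


def parse_compute_capability(cc: str) -> Tuple[int, int]:
    """Turn a "major.minor" string such as "8.6" into a comparable tuple (8, 6)."""
    major, minor = cc.strip().split(".")
    return int(major), int(minor)


def meets_cc_requirement(cc: str, minimum: str = "3.0") -> bool:
    """True if the compute capability is strictly higher than `minimum`."""
    return parse_compute_capability(cc) > parse_compute_capability(minimum)


for cc in ["3.0", "3.5", "7.5", "10.0"]:
    print(cc, meets_cc_requirement(cc))
```

A plain string comparison would wrongly rank "10.0" below "3.0", which is why the sketch converts to integer tuples first.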

For more information, refer to Platform and Hardware Requirements.

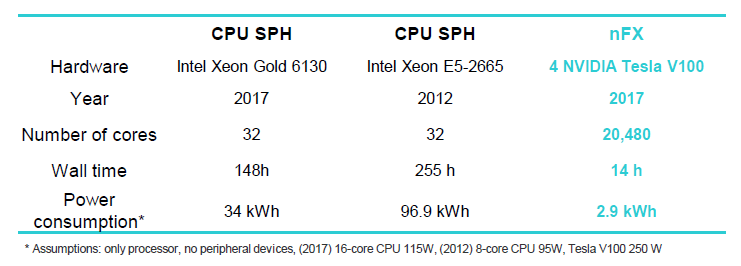

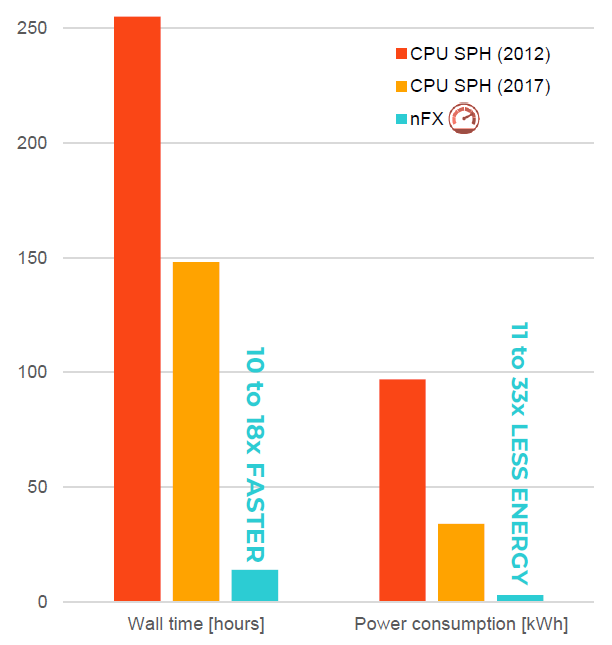

Why GPUs?

Real DCT gearbox case:

- 13.5 million particles

- Extremely complex geometry

- 3000 rpm

- 3.4 seconds of physical time

- Pre-processing: 2 days

Why does nanoFluidX only run on NVIDIA GPU models instead of extending to all brands?

nanoFluidX requires CUDA, which is supported only on NVIDIA GPUs.

Will nanoFluidX be supported on Windows?

Yes. As of the 2022.1 release, nanoFluidX is supported on Windows, limited to a single GPU.

For more information, refer to Windows in the Run nanoFluidX documentation.

Can nanoFluidX run on a virtual machine under Windows (Linux guest, Windows host)?

No.

What is the typical run time of a nanoFluidX simulation in terms of hours?

Typical run times by use case:

- Single phase: 2 hours

- Multiphase: 120 hours

Which NVIDIA driver version do you recommend?

The latest available drivers are recommended in all scenarios.

For more information, refer to Hardware Requirements.

Does the driver version affect performance?

Newer versions may bring slight improvements, but they are not significant (at most a few percent).

Do I need a CUDA installation?

No, all the required libraries are shipped with the nanoFluidX package.

Does the OS distribution/flavor affect performance?

There is no significant difference.

Which OS is recommended?

For a list of all supported operating systems, refer to Platform and Hardware Requirements. RHEL (and its derivatives) and Ubuntu are the most widely used and tend to be the most thoroughly tested. As of the 2022.1 release, nanoFluidX is also available on Windows.

How many particles do you recommend for the best GPU utilization?

For optimal efficiency, an average of at least one to two million particles per GPU is recommended. Using fewer particles per GPU leaves the GPUs underutilized and reduces efficiency.
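The guideline translates into an upper bound on the useful GPU count: divide the total particle count by the target particles per GPU and round down. A Python sketch, using the one-to-two-million range from the answer above as the target; the function name is hypothetical.

```python
def max_gpus_for_utilization(total_particles: int,
                             particles_per_gpu: int = 1_000_000) -> int:
    """Largest GPU count that keeps at least `particles_per_gpu` on each GPU on average."""
    if total_particles <= 0 or particles_per_gpu <= 0:
        raise ValueError("particle counts must be positive")
    # Floor division: one more GPU would push the average below the target.
    # Small cases still get one GPU, even if it is underutilized.
    return max(1, total_particles // particles_per_gpu)


# Example: the 13.5 million-particle gearbox case.
n = 13_500_000
print(max_gpus_for_utilization(n, 1_000_000))  # 13
print(max_gpus_for_utilization(n, 2_000_000))  # 6
```

Going beyond this bound is still possible, but each additional GPU then receives less work than the recommended minimum.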

Does nanoFluidX support any other MPI implementations?

Currently, no. There are no plans to do so in the near future.

How many GPUs do I need?

It depends on the run. Simple cases, coarse-resolution cases, or single-phase cases can be run on one or two GPUs. For what Altair considers an optimal setup (a multiphase formulation and peak accuracy), four GPUs are recommended as a starting point. Eight or more GPUs are recommended for cutting-edge simulations or high workloads.
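The tiers above can be combined with the particles-per-GPU guideline from the previous answer into a simple starting-point heuristic. This is a sketch under stated assumptions, not an Altair sizing tool: the `CaseClass` labels and `suggest_gpus` function are hypothetical, the simple tier starts at one GPU, and the tier recommendation is capped so that each GPU keeps at least one million particles on average.

```python
from enum import Enum


class CaseClass(Enum):
    """Hypothetical labels for the three tiers described above."""
    SIMPLE = "simple, coarse, or single-phase"
    OPTIMAL = "multiphase, peak accuracy"
    CUTTING_EDGE = "cutting-edge or high workload"


# Starting GPU counts from the answer: one or two, four, and eight or more.
STARTING_GPUS = {
    CaseClass.SIMPLE: 1,
    CaseClass.OPTIMAL: 4,
    CaseClass.CUTTING_EDGE: 8,
}


def suggest_gpus(case: CaseClass, total_particles: int,
                 particles_per_gpu: int = 1_000_000) -> int:
    """Start from the tier's recommendation, but do not drop below
    `particles_per_gpu` particles per GPU on average (assumed cap)."""
    cap = max(1, total_particles // particles_per_gpu)
    return min(STARTING_GPUS[case], cap)


print(suggest_gpus(CaseClass.OPTIMAL, 13_500_000))      # 4
print(suggest_gpus(CaseClass.CUTTING_EDGE, 3_000_000))  # 3
```

Taking the minimum of the two numbers is a design choice of this sketch: it favors GPU utilization over raw GPU count when a case is too small to fill the tier's recommendation.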