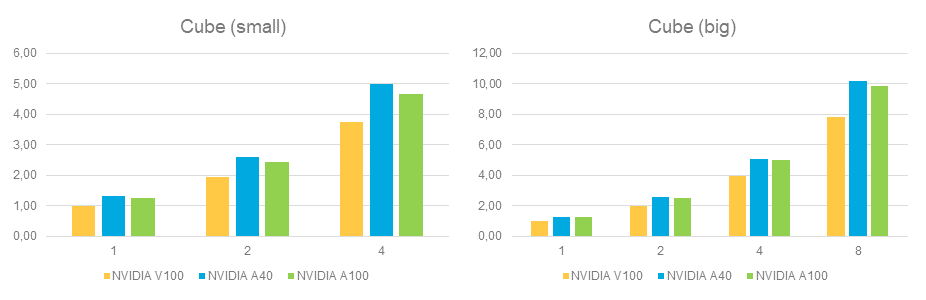

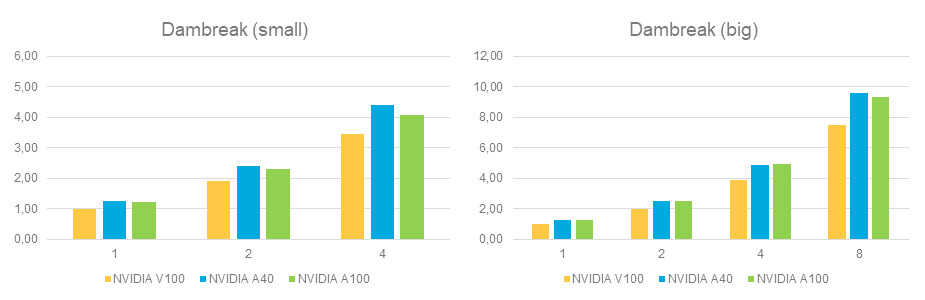

Comparison of Common GPU Models: NVIDIA V100, NVIDIA A100 and NVIDIA A40

Systems

- DGX-1

  - 8x NVIDIA V100 (16 GB)

- Oracle BM.GPU4.8

  - 8x NVIDIA A100 (40 GB)

- Supermicro AS 4124GS TNR

  - 8x NVIDIA A40 (48 GB)

- nanoFluidX Software Stack

  - nanoFluidX 2022.3 with single-precision floating-point arithmetic

Results

- Minimal Cube

  - Simple cube of static fluid particles at rest

- Dambreak

  - Collapsing water column under gravity in a domain (indicated by lines)

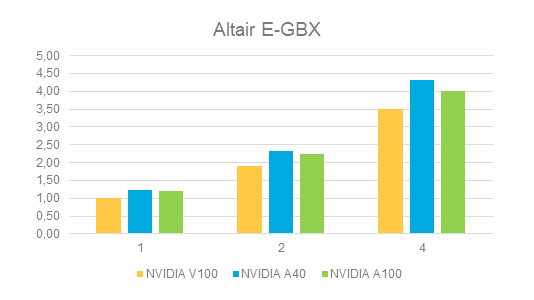

- Altair E-Gearbox

Figure 3.

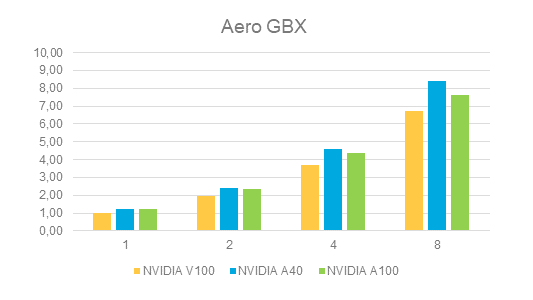

- Aerospace Gearbox

Figure 5.

Additional Notes

- Performance data in the graphs is always relative to one V100 on the DGX-1.

- All cases were run with the WEIGHTED particle interaction scheme.

- All cases were run in single precision.

- All solver output was deactivated to isolate solver performance; in general, enabling output does not change the results significantly.
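
The normalization described in the first note can be sketched as follows. This is only an illustration: it assumes that performance is measured as inverse wall-clock time, which the notes do not state, and the function name, configuration labels, and timings are hypothetical placeholders, not measured results.

```python
def relative_performance(baseline_seconds: float, run_seconds: float) -> float:
    """Return the speedup of a run relative to the baseline (baseline = 1.0).

    Assumption: performance is proportional to 1 / wall-clock time.
    """
    if baseline_seconds <= 0 or run_seconds <= 0:
        raise ValueError("wall-clock times must be positive")
    return baseline_seconds / run_seconds


# Hypothetical wall-clock times in seconds. These are placeholders chosen
# for easy arithmetic, NOT values taken from the benchmark graphs.
placeholder_times = {
    "1x V100 (DGX-1)": 1000.0,  # baseline configuration
    "config B": 500.0,
    "config C": 250.0,
}

baseline = placeholder_times["1x V100 (DGX-1)"]

# Normalize every configuration against the single-V100 baseline.
relative = {
    name: relative_performance(baseline, seconds)
    for name, seconds in placeholder_times.items()
}

for name, value in relative.items():
    print(f"{name}: {value:.2f}x")
```

With this convention the baseline always plots at 1.0, and a value of 2.0 means the case finished in half the baseline time.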